Osaurus


Native, Apple Silicon–only local LLM server. Similar to Ollama, but built on Apple's MLX for maximum performance on M-series chips. SwiftUI app + SwiftNIO server with OpenAI-compatible endpoints.

Features

- Dark Mode

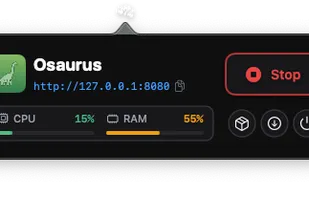

- Sits in the menu bar

- AI-Powered

- OpenAI integration

- Apple Silicon support

Tags

- local-ai

- ai-models

- ai-model-integration

- openai-api

- llm-integration

- large-language-model-tool

Osaurus News & Activities

Recent activities

- bnchndlr liked Osaurus

- POX added Osaurus as an alternative to Alice AI Assistant

- POX added Osaurus as an alternative to Ollama, GPT4ALL, Jan.ai and Open WebUI

- POX added Osaurus

Osaurus information

What is Osaurus?

Native, Apple Silicon–only local LLM server. Similar to Ollama, but built on Apple's MLX for maximum performance on M-series chips. SwiftUI app + SwiftNIO server with OpenAI-compatible endpoints.

Created by Dinoki, a fully native desktop AI assistant and companion.

Highlights:

- Native MLX runtime: Optimized for Apple Silicon using MLX/MLXLLM

- Apple Silicon only: Designed and tested for M-series Macs

- OpenAI API compatible: /v1/models and /v1/chat/completions (streaming and non-streaming)

- Function/Tool calling: OpenAI-style tools + tool_choice, with tool_calls parsing and streaming deltas

- Chat templates: Uses the model-provided Jinja chat_template, including BOS/EOS tokens, with a smart fallback

- Session reuse (KV cache): Faster multi-turn chats via session_id

- Fast token streaming: Server-Sent Events for low-latency output

- Model manager UI: Browse, download, and manage MLX models from mlx-community

- System resource monitor: Real-time CPU and RAM usage visualization

- Self-contained: SwiftUI app with an embedded SwiftNIO HTTP server
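Because the server exposes OpenAI-compatible endpoints, a client needs nothing beyond an HTTP library. The following is a minimal sketch using only Python's standard library to list models and send a non-streaming chat request. It rests on assumptions not confirmed by the description above: the server address `http://127.0.0.1:8080` is a placeholder (use the one the app reports), `/v1/models` is assumed to return the usual OpenAI `{"data": [{"id": ...}]}` shape, and `session_id` is assumed to be a top-level field of the request body.

```python
import json
import urllib.request

# Placeholder address (assumption): use the host and port the Osaurus app shows.
BASE_URL = "http://127.0.0.1:8080"


def list_model_ids():
    """Return model ids from GET /v1/models (assumes the OpenAI list shape)."""
    with urllib.request.urlopen(BASE_URL + "/v1/models") as resp:
        data = json.load(resp)
    return [model["id"] for model in data["data"]]


def build_chat_request(model, messages, stream=False, session_id=None):
    """Build, without sending, a POST request for /v1/chat/completions."""
    body = {"model": model, "messages": messages, "stream": stream}
    if session_id is not None:
        # Assumption: session_id is a top-level body field; sending the same
        # value on every turn of a chat is what lets the server reuse its KV cache.
        body["session_id"] = session_id
    return urllib.request.Request(
        BASE_URL + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model, messages, session_id=None):
    """Send a non-streaming request and return the assistant's reply text."""
    req = build_chat_request(model, messages, session_id=session_id)
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the app running and a model downloaded, `chat(list_model_ids()[0], [{"role": "user", "content": "Hello"}], session_id="demo")` would send a single turn. Splitting request construction from sending keeps the body easy to inspect and test without a live server.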
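With `"stream": true`, OpenAI-compatible servers deliver output as Server-Sent Events. Each chunk arrives on a `data: {...}` line carrying a `choices[].delta`, and the stream ends with `data: [DONE]`. The sketch below assumes Osaurus follows that OpenAI convention. It accepts any iterable of lines (bytes or str), so it can consume an open `urllib` response directly or be tested offline on sample lines.

```python
import json


def iter_stream_deltas(lines):
    """Yield text fragments from an OpenAI-style SSE chat completion stream.

    `lines` is any iterable of raw lines, e.g. the response object returned by
    urllib.request.urlopen, which yields one bytes line per iteration.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank event separators, comments and other fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # OpenAI-style end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:  # role-only or empty deltas carry no text
                yield text
```

Printing each fragment with `print(text, end="", flush=True)` as it arrives is what gives the low-latency feel described above, since tokens appear before the full reply is finished.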
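Tool calling uses the OpenAI request and response shapes. Tools are declared as functions with JSON Schema parameters, and the model's calls come back in `message.tool_calls`, with arguments encoded as a JSON string. In the sketch below, the `get_weather` tool and the helper names are hypothetical. The helpers only build a request body and parse a non-streaming response. Reassembling streamed tool-call deltas is not covered.

```python
import json

# Hypothetical tool, declared in the OpenAI "tools" format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def tool_request_body(model, messages, tools, tool_choice="auto"):
    """Body for POST /v1/chat/completions that offers tools to the model."""
    return {"model": model, "messages": messages,
            "tools": tools, "tool_choice": tool_choice}


def extract_tool_calls(response):
    """Return (call_id, name, arguments) tuples from a non-streaming response."""
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        # OpenAI-style responses encode the arguments as a JSON string.
        calls.append((call["id"], fn["name"], json.loads(fn["arguments"])))
    return calls
```

After running the tool locally, the usual OpenAI flow appends a `{"role": "tool", "tool_call_id": ..., "content": ...}` message and requests another completion so the model can use the result.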