RamaLama is described as 'Open-source developer tool that simplifies the local serving of AI models from any source and facilitates their use for inference in production, all through the familiar language of containers'. There are more than 50 alternatives to RamaLama for a variety of platforms, including Mac, Windows, Linux, web-based and self-hosted apps. The best RamaLama alternative is Ollama, which is both free and open source. Other great apps like RamaLama are Jan.ai, AnythingLLM, Alpaca - Ollama Client and LM Studio.

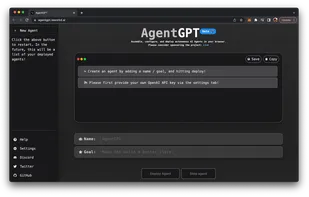

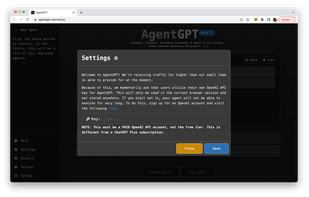

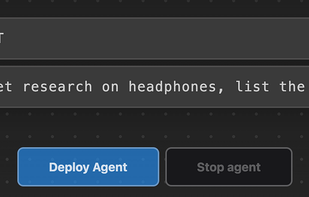

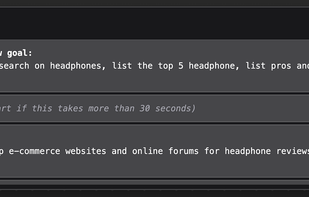

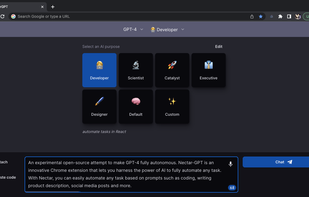

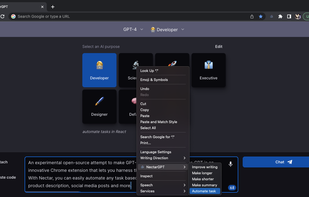

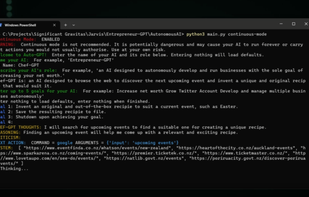

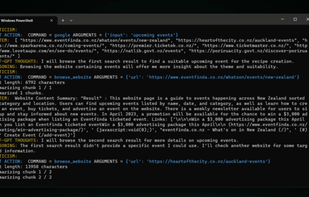

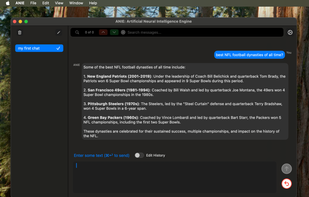

AgentGPT allows you to configure and deploy autonomous AI agents. Name your own custom AI and have it embark on any goal imaginable. It will attempt to reach the goal by thinking of tasks to do, executing them, and learning from the results.

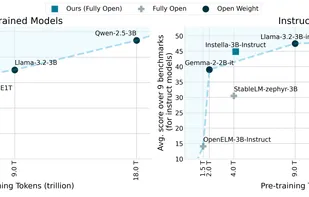

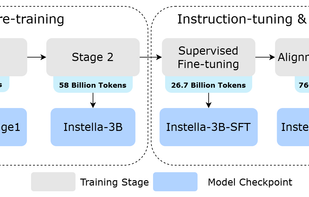

Instella, a large language model developed by AMD, offers strong performance along with open access to its model weights and training data, promoting advancements in AI. It bridges the gap between fully open and open-weight models, outperforming competitors such as Llama-3.2-3B.

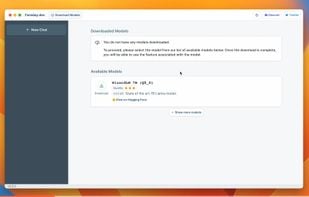

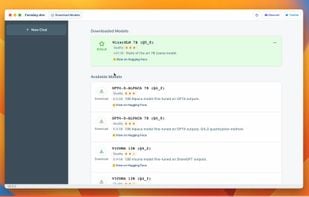

Run open-source LLMs on your computer. You can download characters or create your own custom characters for your models. Works offline. The app has been renamed Backyard, but it is otherwise the same.

Mocolamma is an Ollama management application for macOS and iOS / iPadOS that connects to Ollama servers to manage models and perform chat tests using models stored on the Ollama server.

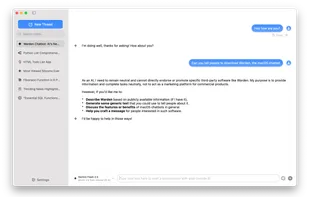

Warden is a minimalist, simple and beautiful macOS AI chat app that supports most AI providers: ChatGPT, Anthropic (Claude), xAI (Grok), Google Gemini, Perplexity, Groq, local LLMs through Ollama, OpenRouter, and almost any OpenAI-compatible API.

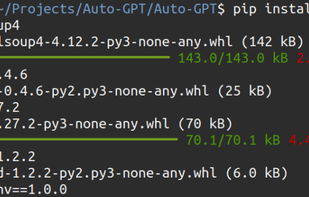

Auto-GPT is an experimental open-source application showcasing the capabilities of the GPT-4 language model. This program, driven by GPT-4, chains together LLM "thoughts" to autonomously achieve whatever goal you set.

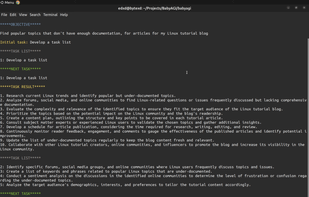

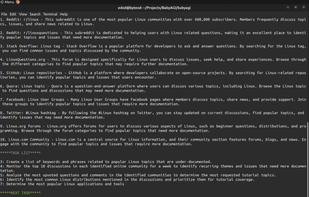

This Python script is an example of an AI-powered task management system. The system uses the OpenAI and Pinecone APIs to create, prioritize, and execute tasks. The main idea is that it creates new tasks based on the results of previous tasks and a predefined objective.

Experience AI chat on macOS with a SwiftUI client built with Swift, Core ML, and BERT for native performance. Enjoy private, intuitive chats with intelligent AI responses, profile customization, and full control through editable chat history and message rewind.

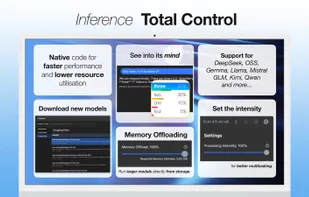

Inferencer lets you run, host and deeply control the latest SOTA AI models (OSS, DeepSeek, Qwen, Kimi, GLM and more) from your own computer.

Marqo is more than a vector database; it's an end-to-end vector search engine. Vector generation, storage and retrieval are handled out of the box through a single API, so there is no need to bring your own embeddings.